What is S3?

S3 provides secure, reliable and scalable object storage in the cloud. It allows you to store and retrieve any amount of data from anywhere on the web at a low cost, and it is designed to be simple to use. Object storage means that data is stored as objects rather than in a file system or as data blocks. This means you can upload any file type. However, it cannot be used to run an operating system or a database.

S3 provides a couple of important features.

- Unlimited storage

- Objects up to 5 TB in size - a given object can be between 0 bytes and 5 terabytes

- Buckets - these can be thought of as top-level folders in which a user stores objects. Buckets cannot contain other buckets, but folders inside a bucket can be emulated with key prefixes.

Buckets

S3 buckets share a universal namespace. This means that your bucket name needs to be globally unique. For example, you probably can’t register example as a name, as it is already taken. A typical S3 URL looks like https://<bucket-name>.s3.<region>.amazonaws.com/<file>. When uploading files to S3 you will receive an HTTP 200 status code if the upload was successful.
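
As a minimal sketch of the URL format above, the snippet below assembles an object URL from its parts. The function name, bucket name, region and key are made up for illustration; only the URL pattern comes from the text.

```python
from urllib.parse import quote


def s3_object_url(bucket: str, region: str, key: str) -> str:
    """Build an object URL of the form https://<bucket>.s3.<region>.amazonaws.com/<key>."""
    # Keep "/" unescaped so folder-like keys stay readable; escape everything else.
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key, safe='/')}"


# Hypothetical bucket, region and key.
print(s3_object_url("my-example-bucket", "eu-north-1", "folder/my image.jpg"))
```

The URL only resolves for anonymous users if the object is publicly readable.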

S3 works like a key-value store. The key is the name of the object, while the value is whatever data is stored in the object. In addition, S3 stores a Version ID, which is useful if a user stores multiple versions of the same object, and some metadata like content-type, last-modified, etc. Versioning has to be explicitly enabled.

S3 stores your files across multiple devices and facilities to ensure availability and durability. Amazon states that S3 is designed for 99.5% to 99.99% availability depending on the S3 tier. However, it appears that once the status dashboard is down they don’t count downtime precisely, so take it with a grain of salt. Some information here. The same goes for durability - Amazon claims 99.999999999% (eleven nines) durability of the data you store, which in practice means that losing data is extremely unlikely.
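
To put such percentages in perspective, here is a small back-of-the-envelope calculation. It is only arithmetic on the quoted figures, and the function names and the object count of ten million are assumptions chosen for illustration.

```python
def downtime_minutes_per_year(availability_percent: float) -> float:
    """Minutes per year a service may be unavailable at a given availability."""
    minutes_per_year = 365 * 24 * 60
    return minutes_per_year * (1 - availability_percent / 100)


def expected_objects_lost_per_year(object_count: int, durability_percent: float) -> float:
    """Average number of objects lost per year at a given annual durability."""
    return object_count * (1 - durability_percent / 100)


# 99.99% availability leaves room for roughly 52.6 minutes of downtime per year.
print(round(downtime_minutes_per_year(99.99), 1))

# With ten million objects and eleven nines of durability you would expect to
# lose about 0.0001 objects per year, i.e. one object every 10,000 years.
print(expected_objects_lost_per_year(10_000_000, 99.999999999))
```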

S3 is tuned for frequent access. Further, data is stored across multiple availability zones. It is suitable for most workloads, and is commonly used for website content, content distribution, mobile and gaming applications and big data analytics. S3 comes in different tiers and includes tools for automatically transitioning between these tiers depending on usage. S3 scales automatically on demand.

Security

A bucket can have default encryption enabled. This means all files are encrypted when they are uploaded. S3 buckets also support Access Control Lists (ACLs). These control which accounts and groups are granted access to the bucket and whether they can read or modify the data. It is possible to attach ACLs to individual objects as well. Bucket policies control which actions are allowed or denied for a given user or group, e.g. Rob can PUT objects, but not DELETE them. The biggest difference between ACLs and policies is that ACLs can be applied to individual objects, whereas bucket policies are attached to the bucket as a whole.
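
The Rob example could be expressed as a bucket policy along the following lines. This is a sketch only: the account ID, user ARN and bucket name are hypothetical, and the policy is merely built and printed as JSON here, not applied to a bucket.

```python
import json

# Hypothetical bucket name and IAM user ARN, for illustration only.
BUCKET_ARN = "arn:aws:s3:::my-example-bucket"
ROB_ARN = "arn:aws:iam::111122223333:user/Rob"

rob_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            # Rob may upload (PUT) objects anywhere in the bucket...
            "Sid": "AllowRobToPutObjects",
            "Effect": "Allow",
            "Principal": {"AWS": ROB_ARN},
            "Action": "s3:PutObject",
            "Resource": f"{BUCKET_ARN}/*",
        },
        {
            # ...but an explicit deny stops him from deleting them.
            "Sid": "DenyRobToDeleteObjects",
            "Effect": "Deny",
            "Principal": {"AWS": ROB_ARN},
            "Action": "s3:DeleteObject",
            "Resource": f"{BUCKET_ARN}/*",
        },
    ],
}

print(json.dumps(rob_policy, indent=2))
```

The printed JSON is the kind of document you would paste into the bucket policy editor under the Permissions tab.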

S3 uses Strong Read-After-Write Consistency. This implies that after a successful write of a new object or an overwrite, any subsequent read will immediately receive the latest version of that object. Further, if a user were to list the objects directly after a write, they would receive the updated listing. This is known as strong consistency.

The Block Public Access setting

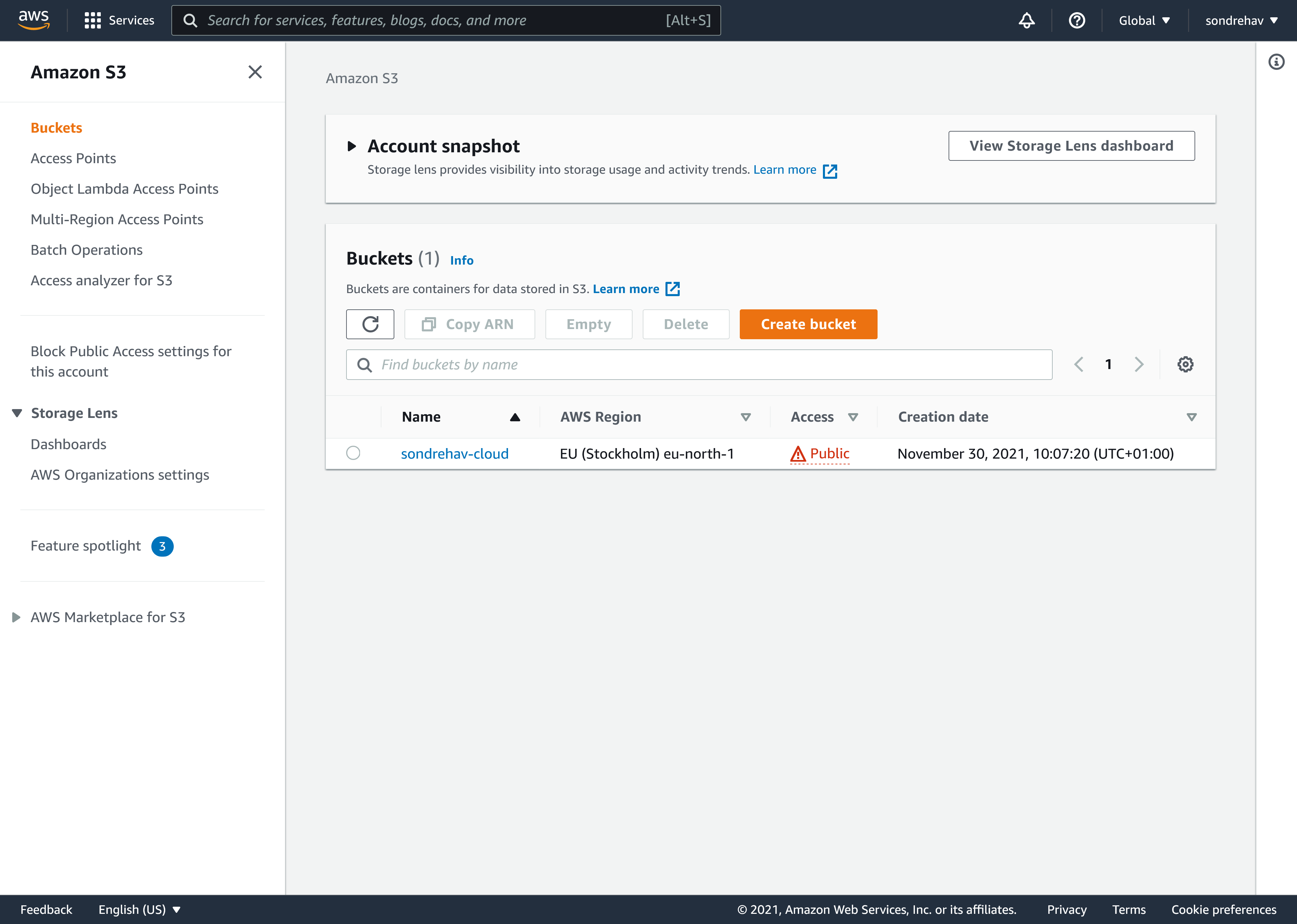

The S3 dashboard with one S3 bucket

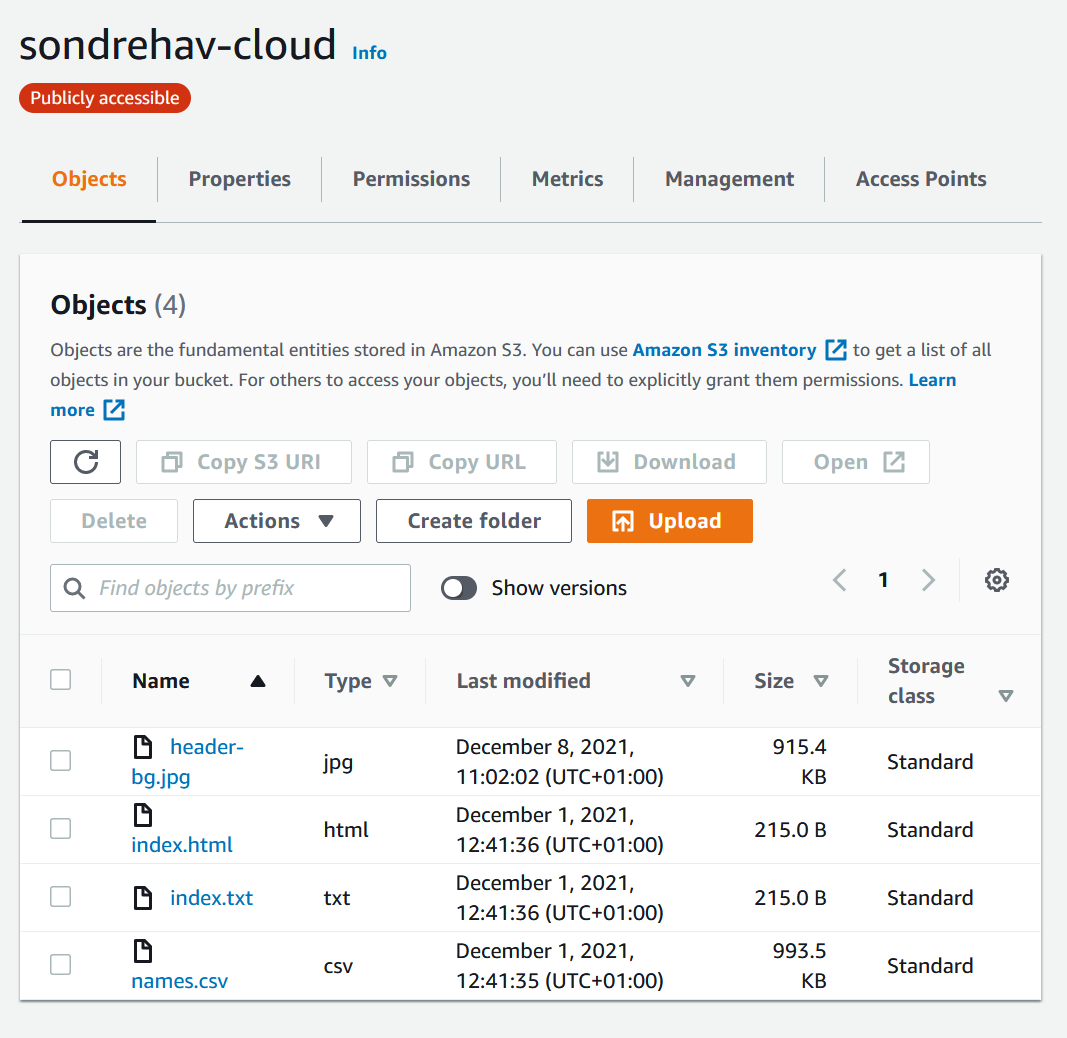

Bucket content with files

When you create a bucket you will be prompted with a few different configuration settings.

- Bucket name - the name of the bucket which needs to be globally unique

- AWS Region

- Object ownership - whether or not you want the bucket to allow ACLs. If ACLs are enabled, objects in the bucket can be owned by other AWS accounts, and access to them is described by the ACLs. If ACLs are disabled, access to objects is specified only through bucket policies.

- Block all public access or block specific types of access - if this is turned on, all public access is blocked. In order to use the bucket with this setting turned on, anything accessing the data needs to be authenticated and authorized, for example through an IAM role or a bucket policy.

- Bucket versioning - whether or not to keep version information on the objects

- Encryption

Once the bucket is created it will be listed on the dashboard. Opening the bucket lets you upload files and edit the bucket settings. If a file is public, you will be able to get a URL for the object and access it from anywhere.

Static website hosting

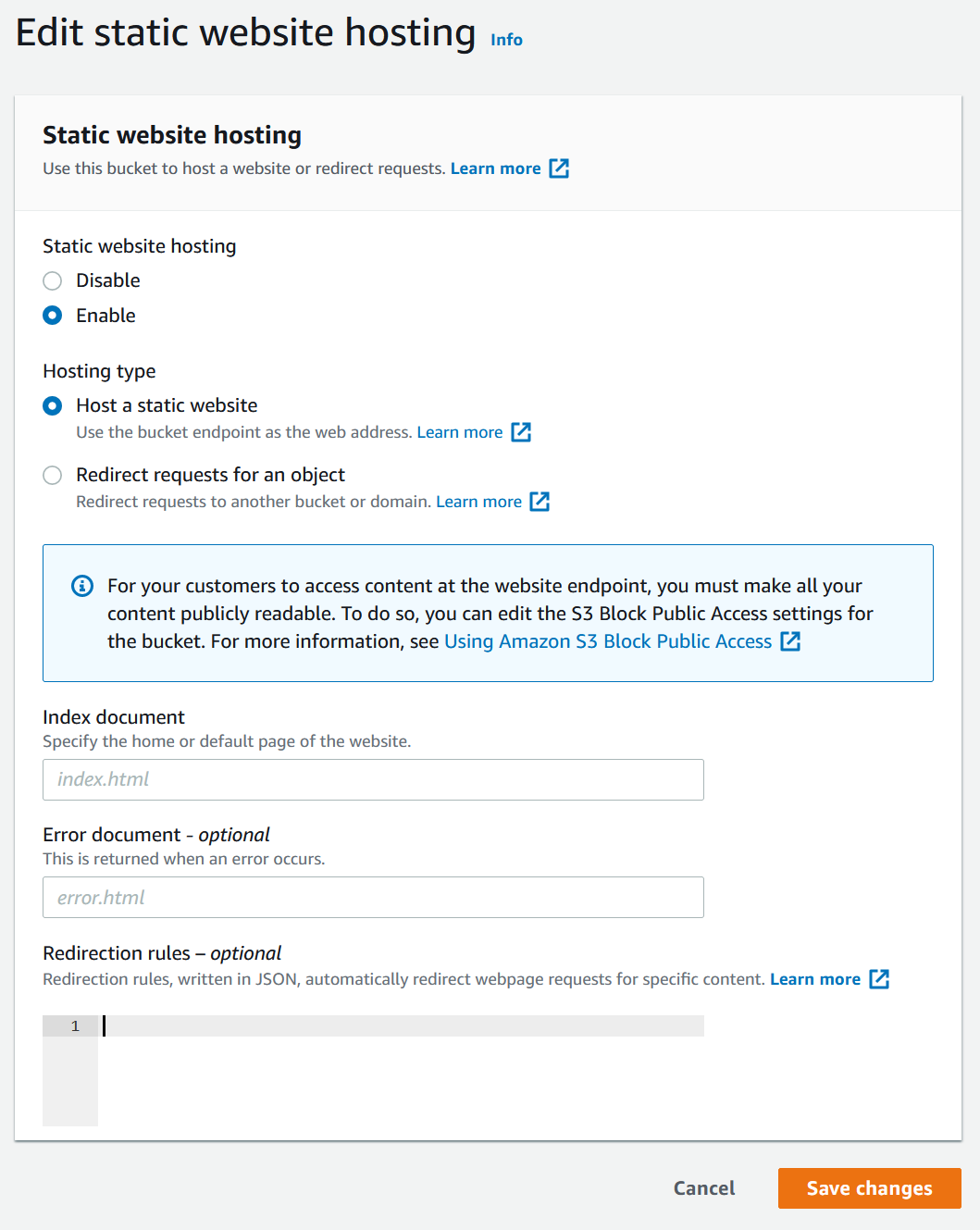

Static website hosting

Under the Properties tab it is possible to enable static website hosting. This means that the S3 bucket will be able to serve HTML pages, with index.html as the index document and error.html as the error document. In order to use static website hosting, users need to be able to access the resources. This means you will either need to turn off the Block Public Access setting and grant public read access through a bucket policy or ACLs, or serve the content from, for example, an EC2 instance with the appropriate security settings.
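
The two pieces of configuration involved can be sketched as plain data. The website configuration below follows the shape I believe the S3 PutBucketWebsite operation expects (as exposed by SDKs such as boto3); treat that, the bucket name and the variable names as assumptions. Nothing is sent to AWS here.

```python
import json

# Hypothetical bucket name.
BUCKET = "my-example-bucket"

# Which documents S3 should serve for the site root and for errors.
website_configuration = {
    "IndexDocument": {"Suffix": "index.html"},
    "ErrorDocument": {"Key": "error.html"},
}

# A bucket policy that lets anyone read (GET) objects in the bucket.
# It only takes effect if Block Public Access is turned off.
public_read_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "PublicReadGetObject",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
        }
    ],
}

print(json.dumps(website_configuration, indent=2))
print(json.dumps(public_read_policy, indent=2))
```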

Versioning

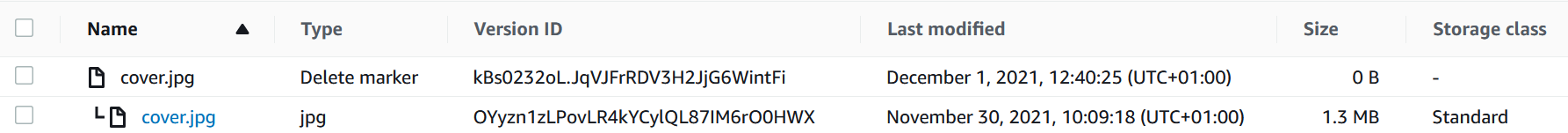

An example of a deleted image object

Versioning an S3 object comes with some advantages and characteristics.

- All versions are stored in S3 which means you can access overwritten content and even deleted content

- Backup of content

- Versioning cannot be disabled once it is turned on, only suspended

- Can be combined with lifecycle rules

- Multi-factor authentication settings for changing versions and deleting objects

Once versioning is enabled you will be able to view old versions and deleted content if you tick the Show versions toggle. An example of a deleted object can be seen in the screenshot above. Old versions of objects are not public by default. In order to restore a deleted object, you’ll have to delete the delete marker. The same goes for restoring an older version - in order to restore it, you’ll have to delete every version that is more recent than the object version you wish to restore.
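
The restore behavior described above can be illustrated with a toy in-memory model. This is not the S3 API; the class and method names are invented, and the model only mimics how a stack of versions and delete markers decides what a plain read returns.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class VersionedObject:
    # Newest entry last; None stands for a delete marker.
    versions: List[Optional[bytes]] = field(default_factory=list)

    def put(self, data: bytes) -> None:
        # Overwriting adds a new version instead of replacing the old one.
        self.versions.append(data)

    def delete(self) -> None:
        # A plain delete only adds a delete marker; older versions remain.
        self.versions.append(None)

    def get(self) -> Optional[bytes]:
        # A read sees the newest entry; a delete marker hides the object.
        return self.versions[-1] if self.versions else None

    def remove_newest(self) -> None:
        # Permanently remove the newest version or delete marker.
        self.versions.pop()


image = VersionedObject()
image.put(b"version 1")
image.put(b"version 2")
image.delete()
assert image.get() is None            # the object looks deleted

image.remove_newest()                 # delete the delete marker
assert image.get() == b"version 2"    # the object is restored

image.remove_newest()                 # delete the newer version
assert image.get() == b"version 1"    # rolled back to the older version
```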

Storage tiers

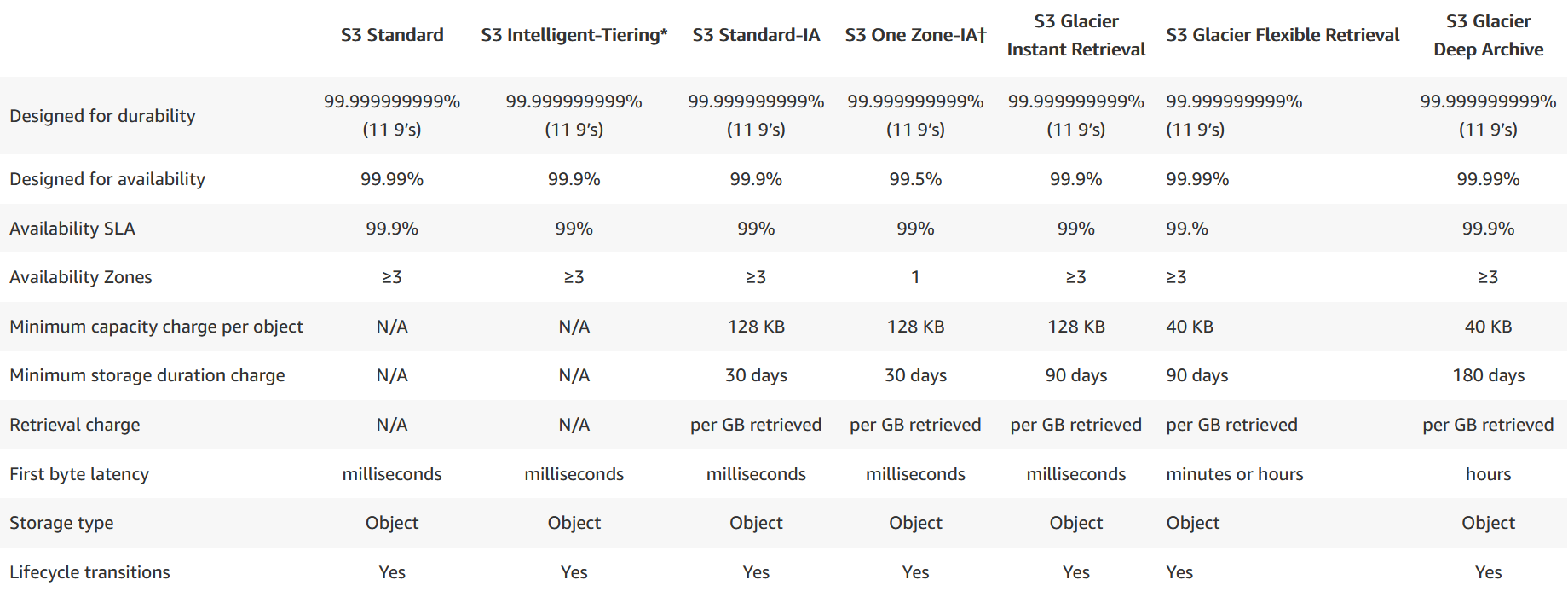

S3 tier comparison

S3 comes in a couple of different storage tiers.

- Standard - Designed for high availability and durability and is stored in at least 3 availability zones. It is designed for frequent access and is suitable for most workloads.

- Standard-Infrequent Access - Designed for infrequently accessed data which requires rapid access when needed. In this tier you pay to access the data, but it has a low per-GB storage price. Suitable for long-term storage, backups and disaster recovery.

- One Zone-Infrequent Access - Like Standard-Infrequent Access, but stored in only one AZ. It costs 20% less than Standard-Infrequent Access and is typically used for non-critical data.

- Glacier and Glacier Deep Archive - These tiers are used for long-term data archiving with long retrieval times. Glacier retrieval times range from a minute to 12 hours, while Glacier Deep Archive returns data within 12 hours by default. In these tiers you pay each time you access data, but they are optimized for cheap storage.

- Intelligent-Tiering - Automatically determines the most appropriate tier based on access patterns. This tier is fully automated.

A full comparison can be seen in the image above. The data is from here.

Lifecycle management

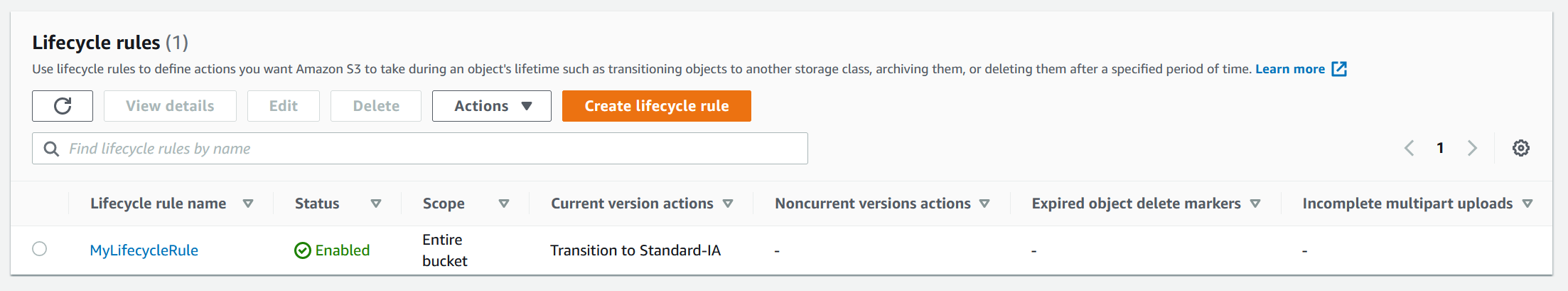

Lifecycle management (found under Management on the dashboard)

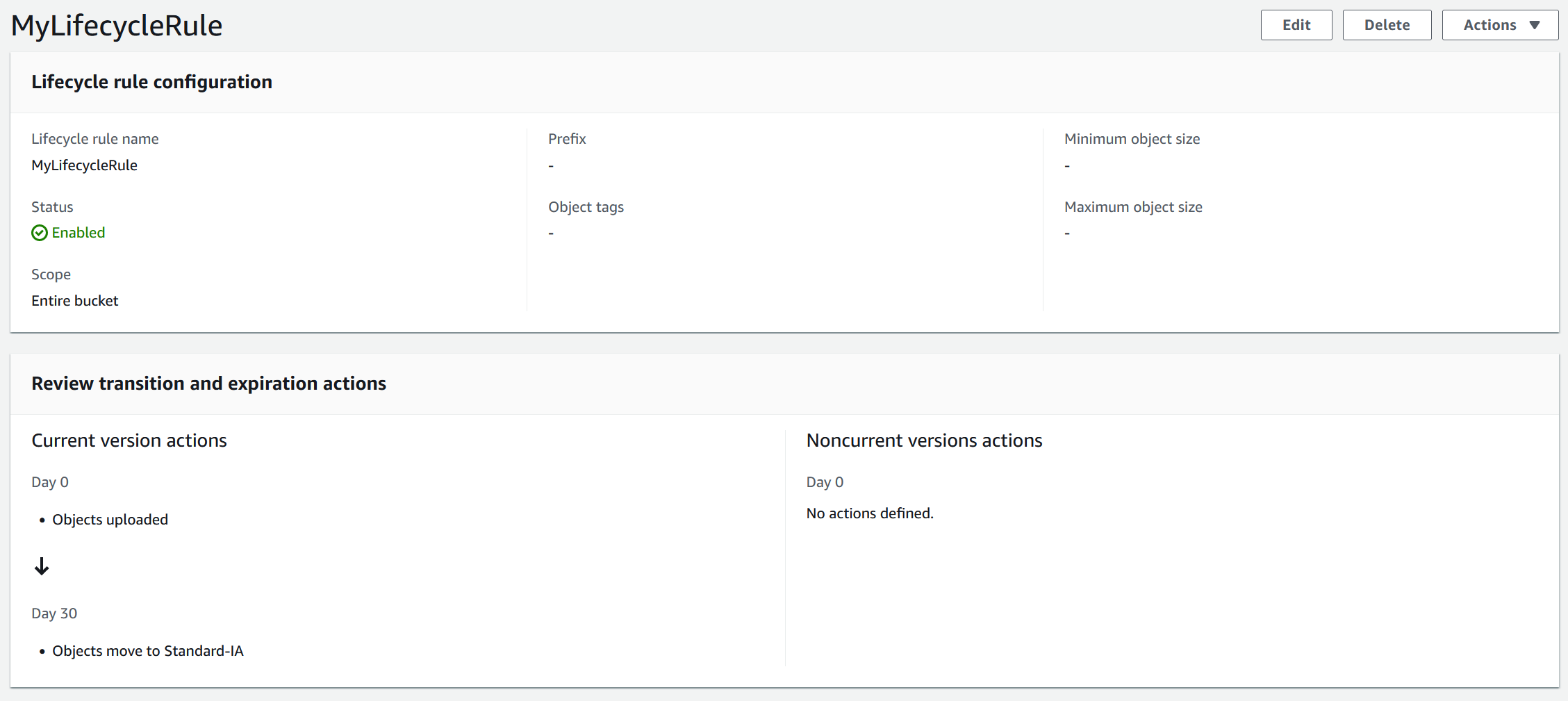

Lifecycle configuration and actions

Lifecycle management in S3 automates moving objects between storage tiers, thus maximizing the cost effectiveness of the bucket. You can also combine lifecycle management with versioning, e.g. store old versions in a different tier than the current data.

There are a couple of options available when specifying the lifecycle rule.

- Name

- Scope, which determines what objects this lifecycle rule will affect. The options are All objects in this bucket or Limit the scope of this rule using one or more filters. Filters look at things like prefix, object tags, object size or combinations of these in order to determine which files are included. Restricting object size is useful when using Glacier, as it has a per-object cost.

- Lifecycle rule actions, which describe what action AWS will take once some condition is met (e.g. when the object is older than 30 days).
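
The three options above map onto a rule definition roughly like the one below. The dictionary follows the shape I believe the S3 lifecycle configuration API uses (as exposed by SDKs such as boto3); the rule name, prefix and day counts are made up, and the helper function is only a local illustration that assumes objects start out in the Standard tier.

```python
# Hypothetical rule: move objects under logs/ to cheaper tiers as they age.
lifecycle_rule = {
    "ID": "archive-old-logs",                # Name
    "Status": "Enabled",
    "Filter": {"Prefix": "logs/"},           # Scope
    "Transitions": [                         # Actions for current versions
        {"Days": 30, "StorageClass": "STANDARD_IA"},
        {"Days": 90, "StorageClass": "GLACIER"},
    ],
    "NoncurrentVersionTransitions": [        # Action for old versions
        {"NoncurrentDays": 30, "StorageClass": "GLACIER"},
    ],
}
lifecycle_configuration = {"Rules": [lifecycle_rule]}


def storage_class_at(age_days: int, rule: dict) -> str:
    """Return the tier a current object version would be in at a given age."""
    current = "STANDARD"  # assumption: objects are uploaded to Standard
    for transition in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= transition["Days"]:
            current = transition["StorageClass"]
    return current


for age in (10, 45, 120):
    print(age, storage_class_at(age, lifecycle_rule))
```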

Object lock

S3 Object Lock enables you to store objects using a write once, read many (WORM) model. It helps to prevent objects from being overwritten or deleted for a fixed amount of time or indefinitely.

Object Lock has different modes. In Governance Mode users can’t overwrite or delete an object version unless they have special permissions. In Compliance Mode no one, including the root user, can overwrite or delete a protected object version. When an object is locked in this mode, its retention period can’t be shortened and its retention mode can’t be changed.

A retention period protects an object version for a fixed amount of time. After the retention period expires the object version can be overwritten or deleted, unless you have placed a legal hold on the object version. A legal hold does not have an associated retention period and remains in effect until it is removed from the object. It can be placed and removed by any user that has the s3:PutObjectLegalHold permission.
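
How the modes, retention periods and legal holds interact can be summarized as a small decision function. This is a simplified local model with invented names, not an AWS API; the "special permissions" of Governance Mode are represented by a single boolean flag.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def can_delete_version(
    mode: str,
    retain_until: Optional[datetime],
    now: datetime,
    legal_hold: bool = False,
    can_bypass_governance: bool = False,
) -> bool:
    """Decide whether a locked object version may be deleted in this model."""
    if legal_hold:
        # A legal hold blocks deletion until it is removed, regardless of dates.
        return False
    if retain_until is None or now >= retain_until:
        # No retention period, or it has expired.
        return True
    if mode == "GOVERNANCE":
        # Still under retention: only users with special permissions may delete.
        return can_bypass_governance
    # COMPLIANCE: nobody may delete before the retention period ends.
    return False


now = datetime(2024, 1, 1, tzinfo=timezone.utc)
retain_until = now + timedelta(days=30)

print(can_delete_version("GOVERNANCE", retain_until, now))
print(can_delete_version("GOVERNANCE", retain_until, now, can_bypass_governance=True))
print(can_delete_version("COMPLIANCE", retain_until, now, can_bypass_governance=True))
```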

Glacier Vault Lock allows you to deploy and enforce compliance controls for individual Glacier vaults with a vault lock policy. Once locked, the policy can no longer be changed.

Encryption

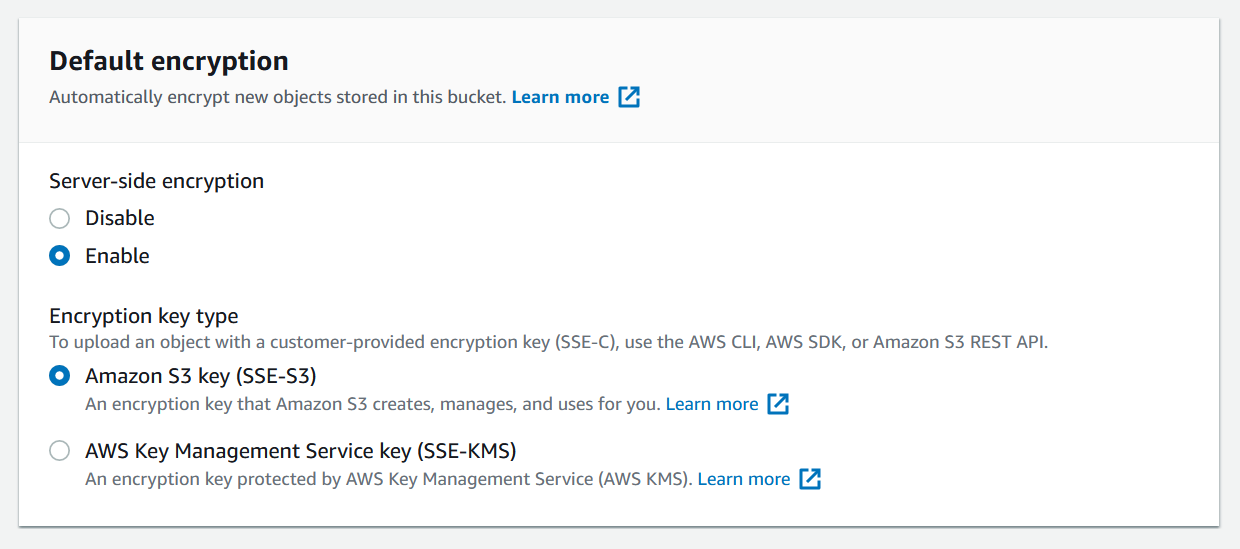

Configure encryption

S3 comes with different encryption types.

- Encryption in Transit implies that SSL/TLS (HTTPS) is used to encrypt data when you upload or download it

- Encryption at Rest: Server-Side Encryption implies that objects are encrypted when they are stored. It comes in three flavors - SSE-S3 (S3-managed keys with AES 256-bit encryption), SSE-KMS (AWS Key Management Service-managed keys) and SSE-C (you provide the keys)

- Encryption at Rest: Client-Side Encryption implies that you encrypt the files yourself before uploading to S3.

There are two ways to enforce server-side encryption - console or bucket policy. When uploading files with a PUT request, the x-amz-server-side-encryption header can be included in the request. It can take the value AES256 for SSE-S3 or aws:kms for SSE-KMS. When this header is set, it tells S3 to encrypt the object at the time of upload with the specified method. You can turn on default encryption by going to Properties > Default encryption > Edit. Then you will be presented with the form in the image above.
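
As a sketch of the bucket-policy route, the snippet below shows the header values for the two managed-key methods (AES256 for SSE-S3, aws:kms for SSE-KMS) and a policy that rejects any PUT request lacking the encryption header. The bucket name and the helper function are hypothetical; the policy is only built and printed, not applied.

```python
import json


def sse_headers(method: str) -> dict:
    """Return the request header that asks S3 to encrypt an upload."""
    values = {"SSE-S3": "AES256", "SSE-KMS": "aws:kms"}
    return {"x-amz-server-side-encryption": values[method]}


# Deny uploads to the (hypothetical) bucket when the header is missing.
deny_unencrypted_uploads = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyUnencryptedUploads",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:PutObject",
            "Resource": "arn:aws:s3:::my-example-bucket/*",
            # "Null ... true" matches requests where the header is absent.
            "Condition": {"Null": {"s3:x-amz-server-side-encryption": "true"}},
        }
    ],
}

print(sse_headers("SSE-S3"))
print(sse_headers("SSE-KMS"))
print(json.dumps(deny_unencrypted_uploads, indent=2))
```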

Optimizing performance

An S3 prefix is anything that comes after the bucket name in a URL but before the file name. So in sondrehav-cloud/folder/subfolder/image.jpg, /folder/subfolder is the prefix. This can be used for optimizing access to objects. S3 has very low latency, and you can get the first byte from an object in 100-200 milliseconds. You can also achieve a high number of requests per second: 3,500 for PUT/COPY/POST/DELETE and 5,500 for GET/HEAD per prefix. So you can get better performance by spreading reads across multiple prefixes. For example, with 2 prefixes you could achieve double the read throughput, i.e. 11,000 GET/HEAD requests per second.
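
The prefix definition and the scaling argument can be made concrete with a few lines of Python. The function names are invented for this sketch, and the limits are the per-prefix figures quoted above, which AWS documents as requests per second.

```python
# Per-prefix request limits (requests per second).
WRITE_LIMIT = 3500   # PUT/COPY/POST/DELETE
READ_LIMIT = 5500    # GET/HEAD


def prefix_of(path: str) -> str:
    """Return the prefix of a 'bucket/folder/.../file' style path."""
    _bucket, _, key = path.partition("/")
    folder, _, _file = key.rpartition("/")
    return "/" + folder if folder else ""


def max_reads_per_second(prefix_count: int) -> int:
    """Upper bound on GET/HEAD requests when reads are spread over prefixes."""
    return READ_LIMIT * prefix_count


print(prefix_of("sondrehav-cloud/folder/subfolder/image.jpg"))  # /folder/subfolder
print(max_reads_per_second(2))                                  # 11000
```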

When using SSE-KMS you must keep the KMS limits in mind. When uploading files you will call GenerateDataKey in the KMS API, and when downloading files you will call Decrypt in the KMS API. Uploads and downloads both count against your KMS quota, and currently you cannot request a quota increase for KMS. The KMS quota is region-specific.

Multipart uploads are recommended for files over 100 MB and required for files over 5 GB. A multipart upload splits the file into multiple parts and uploads them in parallel. S3 Byte-Range Fetches parallelize downloads by specifying byte ranges. If a download fails, it only fails for a specific byte range, so only that range has to be fetched again.
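
Both techniques come down to cutting a file into byte ranges. The sketch below computes such ranges and formats them as HTTP Range header values; the function names and the sizes in the example are illustrative, and no request is actually sent.

```python
from typing import List, Tuple


def byte_ranges(total_size: int, part_size: int) -> List[Tuple[int, int]]:
    """Split total_size bytes into inclusive (start, end) ranges of part_size."""
    if total_size < 0 or part_size <= 0:
        raise ValueError("total_size must be >= 0 and part_size must be > 0")
    return [
        (start, min(start + part_size, total_size) - 1)
        for start in range(0, total_size, part_size)
    ]


def range_header(start: int, end: int) -> str:
    """Format an inclusive byte range as an HTTP Range header value."""
    return f"bytes={start}-{end}"


# A 25-byte object fetched in 10-byte ranges; the last range is shorter.
for start, end in byte_ranges(25, 10):
    print(range_header(start, end))
```

Each range can be fetched (or, for multipart uploads, sent) by a separate worker, and a failed range can be retried without touching the others.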

Backing up data with S3 replication

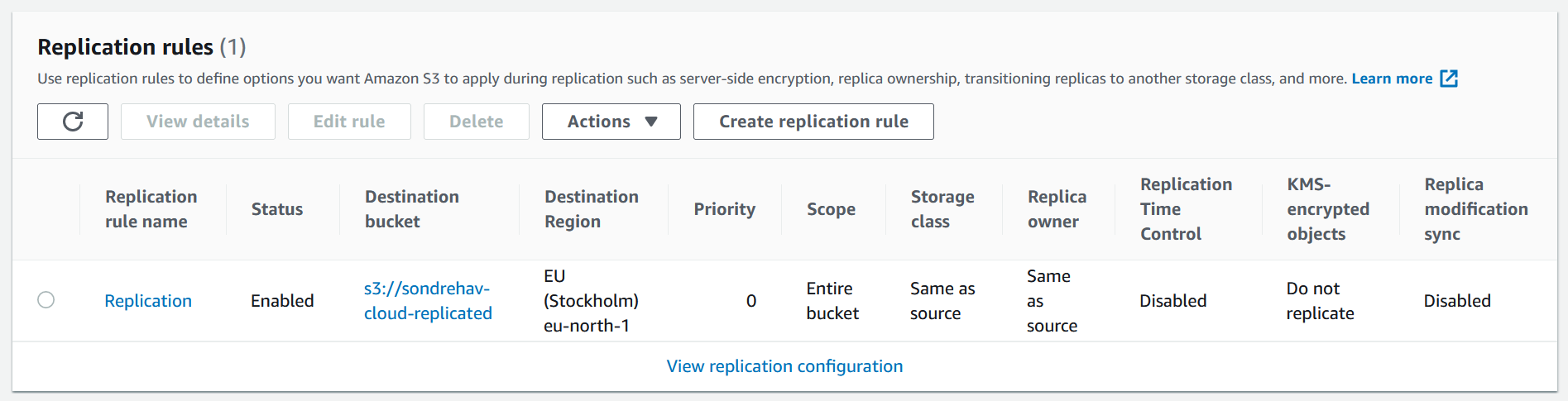

Replication rule table

S3 replication is a way to replicate data from one bucket to another. In order to use replication, versioning must be enabled on both the source and the destination bucket. Objects already in an existing bucket are not replicated automatically, but once replication is turned on all subsequent objects will be replicated. Delete markers are not replicated by default, but this can optionally be enabled. The destination bucket can be in the same region or in a different region.

You add replication rules by going to the Management tab of an S3 bucket. There you will find the table Replication rules.
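
A replication rule created in that table corresponds roughly to a configuration like the one below. The structure follows what I believe the S3 replication API expects (as exposed by SDKs such as boto3); the role ARN, bucket ARN and rule name are hypothetical, and the dictionary is only printed, not applied.

```python
import json

replication_configuration = {
    # IAM role that S3 assumes to copy objects (hypothetical ARN).
    "Role": "arn:aws:iam::111122223333:role/my-replication-role",
    "Rules": [
        {
            "ID": "replicate-everything",
            "Priority": 1,
            "Status": "Enabled",
            # An empty prefix applies the rule to all objects in the bucket.
            "Filter": {"Prefix": ""},
            # Delete markers are not replicated unless this is enabled.
            "DeleteMarkerReplication": {"Status": "Disabled"},
            # Destination bucket, in the same or another region (hypothetical ARN).
            "Destination": {"Bucket": "arn:aws:s3:::my-backup-bucket"},
        }
    ],
}

print(json.dumps(replication_configuration, indent=2))
```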